Opposite of Arbitrary and Capricious

John Shenot is a senior advisor to the U.S. program at the Regulatory Assistance Project, an independent, global, non-governmental organization advancing policy innovation and thought leadership within the energy community. A former staffer with Wisconsin’s Public Service Commission and Department of Natural Resources, he advises state utility commissions and environmental regulatory agencies throughout the U.S. on public policy best practices.

Chris Neme is a principal and cofounder of Energy Futures Group, a clean energy consulting firm. He has worked with clients in more than thirty states and abroad on issues including energy efficiency, demand response, strategic electrification and distributed energy resources. He co-authored the 2017 National Standard Practice Manual for Assessing Cost-Effectiveness of Energy Efficiency Resources, and the 2020 National Standard Practice Manual for Benefit-Cost Analysis of Distributed Energy Resources.

Everyone who works in utility regulation understands that decisions will not hold up in court if they are deemed arbitrary and capricious. We hear it all the time. But how can regulators avoid this pitfall? Well, according to a popular online thesaurus, the opposite of arbitrary can be summed up in a few key words: logical, reasonable, and consistent.

How do regulators evaluate distributed energy resources? For decades, state public utility commissions have ordered utilities and third-party energy efficiency program administrators to use benefit-cost analysis (BCA) techniques to determine whether efficiency programs are cost-effective. A growing number of commissions now require BCAs to evaluate other distributed energy resources (DERs) and even some types of utility infrastructure investments, such as advanced meters.

A landmark 2020 document, the National Standard Practice Manual for Benefit-Cost Analysis of Distributed Energy Resources (NSPM), offers regulators and others a set of fundamental principles that can guide their decisions about how to conduct BCAs and which cost tests to use in their jurisdiction.

Different tests consider cost-effectiveness from different perspectives: the utility paying for the program (a utility cost test); all parties incurring costs for the program, typically the utility plus participants (a total resource cost test); society as a whole (a societal cost test); or regulators or policymakers seeking to achieve a given state's unique public policy goals (a jurisdiction-specific test).

Each BCA test evaluates a unique combination of cost categories and benefit categories that are relevant when considering value from the chosen perspective. Each test provides useful information, and some jurisdictions consider the results of multiple tests, but most rely on just one as their primary test for decision-making purposes. One of the core principles of the NSPM is that the primary test should be jurisdiction-specific, based on the jurisdiction's policy goals.

Chris Neme: A new handbook by the National Energy Screening Project as a companion to the NSPM offers tips on how to quantify inputs to a BCA.

That may be the same as one of the other traditional tests if that's what policy goals dictate. However, it will more typically be a unique test that combines several perspectives relevant to those goals. (The NSPM includes detailed explanations of how the various cost tests differ, as well as a step-by-step process regulators can use to design a jurisdiction-specific test.)
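To make the idea of perspectives concrete, each test can be modeled as the set of impact categories it counts. A jurisdiction-specific test can then be modeled as utility system impacts plus whatever impacts the jurisdiction's policy goals make relevant. This is a minimal sketch: the category names are hypothetical simplifications, and building the jurisdiction-specific test on top of utility system impacts is our assumption for illustration, not the NSPM's step-by-step design process.

```python
from __future__ import annotations

# Hypothetical, simplified impact categories (the NSPM's own lists are more detailed).
UTILITY = frozenset({"utility_system"})
PARTICIPANT = frozenset({"participant"})
SOCIETAL = frozenset({"carbon", "public_health"})

# Each traditional test, modeled as the set of impact categories it counts.
TRADITIONAL_TESTS = {
    "utility_cost": UTILITY,
    "total_resource_cost": UTILITY | PARTICIPANT,
    "societal_cost": UTILITY | PARTICIPANT | SOCIETAL,
}

def jurisdiction_specific_test(policy_impacts: frozenset[str]) -> frozenset[str]:
    """Utility system impacts plus the impacts the jurisdiction's policy goals
    make relevant (an assumed simplification, not the NSPM's procedure)."""
    return UTILITY | policy_impacts

def matching_traditional_test(test: frozenset[str]) -> str | None:
    """Name of the traditional test with exactly the same categories, if any."""
    for name, categories in TRADITIONAL_TESTS.items():
        if categories == test:
            return name
    return None

# Goals limited to climate: a unique test (utility system + carbon).
climate_jst = jurisdiction_specific_test(frozenset({"carbon"}))
print(sorted(climate_jst), matching_traditional_test(climate_jst))

# Goals spanning participants and all societal impacts: the societal cost test.
broad_jst = jurisdiction_specific_test(PARTICIPANT | SOCIETAL)
print(matching_traditional_test(broad_jst))
```

Depending on the goals supplied, the constructed test either coincides with a traditional test or is unique, which mirrors the two outcomes described in the paragraph above.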

Another fundamental NSPM principle, and the one this article focuses on (the manual sets out eight in all), is to compare DERs with each other and with utility-scale energy resources using consistent methods and assumptions.

What Does Consistent Mean?

When it comes to BCAs, there are three key aspects of being consistent. All three aspects must be considered if one hopes to optimize investment decisions across all types of resources.

Consistent test: The first aspect of consistency is to use the same cost-effectiveness test for all DERs (or, ideally, for all resources whether distributed or not). Most state utility commissions have formally adopted, through rules or orders, a primary test for use in evaluating efficiency programs but have been far less formal in deciding which tests to use for evaluating other DERs.

Figure 1 - Range of Utility Planning Activities, and Figure 2 - Hypothetical Rooftop Solar & Efficient Central A/C

A single primary test can be selected or designed for use with all DERs, with the understanding that for some categories of value, some resources may create zero costs and/or zero benefits. For example, energy storage resources can provide a wide range of ancillary service benefits while efficiency measures typically cannot.

But that doesn't mean we should use a different test for these two types of resources; it just means that when we evaluate most efficiency measures, the ancillary service value will be zero. Similarly, some efficiency measures, such as efficient air conditioners, provide peak demand benefits (in a summer-peaking state), while others, such as efficient streetlights, do not. Again, that doesn't mean we should use a different test for different measures, just that the avoided capacity cost value of streetlighting measures will be zero.
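Applying a single test to every resource can be as simple as one function that counts the same benefit categories for all of them and values any category a resource does not provide at zero. The sketch below assumes a hypothetical list of benefit categories and made-up dollar values. Only the zero entries follow the text: no ancillary service value for the efficiency measures, and no avoided capacity value for streetlights in a summer-peaking system.

```python
from __future__ import annotations

# Benefit categories counted by one hypothetical primary test.
PRIMARY_TEST_BENEFITS = ("avoided_energy", "avoided_capacity", "ancillary_services", "carbon")

def benefit_cost_ratio(benefits: dict[str, float], cost: float) -> float:
    """Same test for every resource: a category the resource does not
    provide is valued at zero rather than calling for a different test."""
    total = sum(benefits.get(category, 0.0) for category in PRIMARY_TEST_BENEFITS)
    return total / cost

# Made-up present values ($ thousands); omitted categories count as zero.
RESOURCES = {
    "battery_storage": ({"avoided_energy": 40.0, "avoided_capacity": 60.0,
                         "ancillary_services": 30.0}, 100.0),
    "efficient_central_ac": ({"avoided_energy": 70.0, "avoided_capacity": 50.0,
                              "carbon": 20.0}, 100.0),
    # Streetlight savings occur at night: no summer-peak capacity value.
    "efficient_streetlights": ({"avoided_energy": 90.0, "carbon": 25.0}, 100.0),
}

for name, (benefits, cost) in RESOURCES.items():
    print(f"{name}: BCR = {benefit_cost_ratio(benefits, cost):.2f}")
```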

Consistent methods: The second aspect of consistency is to strive to use identical data sources, formulas, and methods in all BCAs. This does not mean that the value of benefits or costs will be identical for different distributed resources. For example, the average value of avoided energy costs for a distributed solar program may differ from the average for a water heater efficiency program because the savings have different seasonal and hourly profiles.

However, the approach to estimating the seasonal and hourly values of energy savings should be the same across all DERs. The same should be true for valuing avoided capacity costs, valuing carbon-emission reductions, choice of discount rate, and all other assumptions relevant to a jurisdiction's cost-effectiveness test.
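A small numerical sketch shows how identical methods can still yield different values. Both savings profiles below are valued against one shared hourly avoided-cost curve, and any present value uses one shared discount rate. The 24-hour curve, the two profiles, the 5 percent rate, and the function names are all invented for illustration and are not drawn from any jurisdiction.

```python
from __future__ import annotations

# Shared assumptions used for every DER (all values hypothetical).
# Avoided energy cost in $/MWh for each hour of a representative day.
AVOIDED_COST = [30] * 7 + [45] * 5 + [55] * 4 + [90] * 4 + [50] * 4
DISCOUNT_RATE = 0.05

# Hypothetical hourly savings profiles in MWh (24 values each).
SOLAR = [0] * 7 + [1, 2, 3, 4, 5] + [5, 4, 3, 2] + [1, 0, 0, 0] + [0] * 4
WATER_HEATER = [1] * 7 + [3, 3, 1, 1, 1] + [1, 1, 1, 1] + [2, 3, 3, 2] + [1] * 4

def average_avoided_cost(savings_mwh: list[float]) -> float:
    """Savings-weighted average $/MWh, using the same hourly curve for any DER."""
    if len(savings_mwh) != len(AVOIDED_COST):
        raise ValueError("savings profile must have one value per hour")
    value = sum(s * p for s, p in zip(savings_mwh, AVOIDED_COST))
    return value / sum(savings_mwh)

def present_value(annual_benefit: float, years: int) -> float:
    """Discount a constant end-of-year benefit stream at the shared rate."""
    return sum(annual_benefit / (1 + DISCOUNT_RATE) ** t for t in range(1, years + 1))

for name, profile in {"rooftop solar": SOLAR, "water heater efficiency": WATER_HEATER}.items():
    print(f"{name}: ${average_avoided_cost(profile):.2f}/MWh")
```

With these made-up inputs, the water heater savings average more per MWh only because more of them fall in the high-cost evening hours; the valuation method is the same for both resources.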

John Shenot: Wherever a comprehensive review of BCA practices is not considered realistic, note that progress toward consistency can be made incrementally.

This does not mean that outdated values should be used in a BCA done today to make it consistent with one done two years ago, or that a new methodology expected to estimate values better than older methodologies should be forgone.

The best information available should always be used to assess cost-effectiveness. However, that best information should be applied consistently — on a forward-going basis — to cost-effectiveness assessments of all DERs. (A new handbook published by the National Energy Screening Project as a companion document to the NSPM offers practical tips on how to quantify inputs to a BCA.)
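One hypothetical way to apply "best information, applied consistently on a forward-going basis" is to keep dated vintages of the shared assumptions and have every BCA, whatever the resource type, use the vintage in effect on its evaluation date. A newer vintage then governs every BCA from its adoption date onward, while a BCA dated earlier still resolves to the vintage that applied at the time. The vintage dates, the two assumption names, and their values are invented for illustration.

```python
from __future__ import annotations

from bisect import bisect_right
from datetime import date

# Hypothetical vintages of shared BCA assumptions, sorted by adoption date.
VINTAGES = [
    (date(2021, 1, 1), {"discount_rate": 0.07, "carbon_value_per_ton": 50.0}),
    (date(2023, 1, 1), {"discount_rate": 0.05, "carbon_value_per_ton": 80.0}),
]

def assumptions_in_effect(evaluation_date: date) -> dict[str, float]:
    """Return the latest vintage adopted on or before the evaluation date,
    so every DER evaluated on that date uses the same assumptions."""
    adoption_dates = [adopted for adopted, _ in VINTAGES]
    index = bisect_right(adoption_dates, evaluation_date)
    if index == 0:
        raise ValueError(f"no assumptions adopted as of {evaluation_date}")
    return VINTAGES[index - 1][1]

# Any BCA run in mid-2024, for any DER type, picks up the 2023 vintage.
print(assumptions_in_effect(date(2024, 5, 1)))
```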

Consistent use of BCA: The third aspect of consistency is to incorporate BCA methods into a wide range of regulatory proceedings in which utilities make investment plans. This includes not only DER program plans, but also long-term distribution system plans and bulk power system plans, as shown in Figure 1.

Although these planning activities are typically conducted separately, DERs offer potential solutions to distribution system and bulk power system needs, and the best way to maximize the benefits of utility investments is to consistently apply BCA methods to all types of planning processes. Using BCA in just one of these planning processes, or none at all, or taking a different approach to BCA in each planning process will lead to suboptimal results for utility shareholders and ratepayers.

Is Inconsistency a Problem?

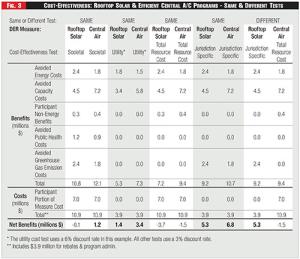

Figure 3 - Cost-Effectiveness: Rooftop Solar & Efficient Central A/C Programs - Same & Different Tests

Serious problems can arise from using inconsistent tests, methods, or assumptions. Most important, the result of inconsistency can be over-investment in less cost-effective resources and under-investment in more cost-effective resources.

To illustrate this point, we present a hypothetical example where a jurisdiction is evaluating the cost-effectiveness of potential investments in two residential rebate programs. The first, a rooftop solar program, would offer a three-thousand-dollar rebate to a thousand participants; the second, for efficient central air conditioners, would offer a three-hundred-dollar rebate to ten thousand participants. In both cases, program administration costs account for thirty percent of the rebates. Other assumptions about these two programs are presented in Figure 2.
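The utility program costs follow directly from the numbers stated above. Counting only the rebate and administration costs the text mentions (Figure 2 may list other assumptions), both programs pay out three million dollars in rebates and would cost the utility the same total. The helper below is just that arithmetic; its name is ours.

```python
ADMIN_SHARE = 0.30  # administration costs as a share of rebates, per the example

def utility_program_cost(rebate_per_participant: float, participants: int) -> float:
    """Rebates paid plus administration costs at ADMIN_SHARE of rebates."""
    rebates = rebate_per_participant * participants
    return rebates + ADMIN_SHARE * rebates

solar = utility_program_cost(3_000, 1_000)
central_ac = utility_program_cost(300, 10_000)
print(f"Rooftop solar: ${solar:,.0f}")                # Rooftop solar: $3,900,000
print(f"Efficient central A/C: ${central_ac:,.0f}")   # Efficient central A/C: $3,900,000
```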

In this hypothetical jurisdiction, established public policies focus on just utility system impacts and societal climate impacts, so neither participant impacts nor air pollution-related public health impacts are included, but a monetary value is attached to avoiding carbon dioxide emissions. In other words, the jurisdiction-specific test is equal to the utility cost test plus the impacts on carbon dioxide emissions.
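Expressed as benefit-cost ratios, this hypothetical jurisdiction-specific test differs from the utility cost test only by the monetized carbon dioxide benefit in the numerator. The notation is ours, not the NSPM's: B_util is the present value of avoided utility system costs, C_util is the utility's program cost, Q_CO2 is the tons of carbon dioxide avoided, and V_CO2 is the monetary value assigned per ton.

```latex
\mathrm{BCR}_{\mathrm{UCT}} = \frac{B_{\mathrm{util}}}{C_{\mathrm{util}}},
\qquad
\mathrm{BCR}_{\mathrm{JST}} = \frac{B_{\mathrm{util}} + Q_{\mathrm{CO_2}}\, V_{\mathrm{CO_2}}}{C_{\mathrm{util}}}
```

Participant costs and benefits appear in neither ratio, matching the jurisdiction's exclusion of participant impacts.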

As Figure 3 shows, when these assumptions are used, and each DER is analyzed using the same cost-effectiveness test, the efficient central air conditioner rebate program is more cost-effective than the rooftop solar program regardless of which cost test is used.

(For simplicity, we assume in this hypothetical scenario that the two measures under consideration have identical — and relatively small, at five percent — participant nonenergy benefits as a percentage of avoided utility system costs. Those benefits are included in the total resource cost and societal cost tests, but not the utility cost test or this hypothetical jurisdiction-specific test.)

However, what if our hypothetical jurisdiction used different tests to evaluate these two different types of DERs? Specifically, what if they analyzed the rooftop solar program using a jurisdiction-specific test, but used a total resource cost test to evaluate the efficient central air conditioner program?

As shown in the last two columns of Figure 3, the rooftop solar program not only looks much better, but it appears to be the only one of the two programs worth pursuing.

Thus, a jurisdiction that used these different tests to assess the economic merits of the two programs would likely invest substantial resources into the rooftop solar rebate program and nothing into the efficient central air conditioner program — even though the latter program produces more system cost savings per ratepayer dollar spent (the utility cost test), more societal cost savings per societal dollar spent (the societal cost test) and has a lower cost per ton of carbon emission reduction (all tests). Put simply, the use of different cost-effectiveness tests to assess each DER program could result in decidedly uneconomic decisions.
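The mechanism behind Figure 3 can be reproduced in a few lines. This sketch does not use the Figure 2 inputs, which are not reproduced in the text. The rebates, participation, 30 percent administration share, and 5 percent participant nonenergy benefits come from the example; the avoided utility costs, tons of CO2 avoided, carbon value, and rebate share of measure cost are invented. The test definitions are simplified assumptions, and the societal cost test is omitted for brevity.

```python
from dataclasses import dataclass

ADMIN_SHARE = 0.30    # from the example: administration = 30% of rebates
NEB_SHARE = 0.05      # from the example: participant NEBs = 5% of avoided utility costs
REBATE_SHARE = 0.50   # hypothetical: rebate as a share of incremental measure cost
CARBON_VALUE = 50.0   # hypothetical: $ per ton of CO2 avoided

@dataclass
class Program:
    rebate: float                # $ per participant (from the example)
    participants: int            # from the example
    avoided_utility_cost: float  # hypothetical present value, $
    co2_tons_avoided: float      # hypothetical lifetime tons

    @property
    def utility_cost(self) -> float:
        return self.rebate * self.participants * (1 + ADMIN_SHARE)

    @property
    def participant_cost(self) -> float:
        # Incremental measure cost not covered by the rebate.
        rebates = self.rebate * self.participants
        return rebates / REBATE_SHARE - rebates

    def bcr(self, test: str) -> float:
        """Benefit-cost ratio under one of three simplified tests."""
        if test == "UCT":
            return self.avoided_utility_cost / self.utility_cost
        if test == "TRC":  # adds participant costs and nonenergy benefits
            benefits = self.avoided_utility_cost * (1 + NEB_SHARE)
            return benefits / (self.utility_cost + self.participant_cost)
        if test == "JST":  # utility cost test plus monetized CO2 reductions
            carbon = self.co2_tons_avoided * CARBON_VALUE
            return (self.avoided_utility_cost + carbon) / self.utility_cost
        raise ValueError(f"unknown test: {test}")

solar = Program(3_000, 1_000, avoided_utility_cost=3.0e6, co2_tons_avoided=30_000)
central_ac = Program(300, 10_000, avoided_utility_cost=4.5e6, co2_tons_avoided=40_000)

# Consistent tests: central A/C comes out ahead under each one.
for test in ("UCT", "TRC", "JST"):
    print(f"{test}: solar {solar.bcr(test):.2f}, central A/C {central_ac.bcr(test):.2f}")

# Mixed tests: solar gets the JST while central A/C gets the TRC.
print(f"Mixed: solar {solar.bcr('JST'):.2f}, central A/C {central_ac.bcr('TRC'):.2f}")
```

With these made-up inputs, the A/C program wins under every consistently applied test, yet the mixed comparison makes solar look like the only program with a ratio above 1.0. Other invented inputs would give other numbers; the mechanism, not the values, is what carries over.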

Note that both the measure/program characteristics and avoided costs used are purely hypothetical values; they are realistic for some U.S. jurisdictions but are not derived from current actual data in any specific region.

Also, our example is oversimplified in ways that might be significant for an actual program evaluation. For instance, it uses identical avoided energy cost values even though the two types of DERs have different hourly load profiles, and it assumes identical rebate levels as a percentage of incremental measure cost, among other simplifications.

Different assumptions could produce the opposite result: the rooftop solar program could be more cost-effective under every consistently applied test, yet look less cost-effective than the efficient central air conditioner program if the two were evaluated with different tests. Either way, the fundamental point of the example stands: it is important to use a consistent test across all DER and resource assessments.

How to Go from Status Quo to Consistency?

States that want to improve the consistency of their BCA practices have two options. First, they could try to change everything all at once — the whole enchilada. A few states have opened big proceedings to develop (or consider developing) consistent BCA methods for use in the future across multiple (or all) resource types. Notable examples include California, New York, Maryland, and Washington.

The second option is to progress toward consistency incrementally, one docket at a time. Every proceeding in which BCA results are used presents an opportunity to increase consistency. Many types of proceedings, such as those where utility energy efficiency programs are approved, come up on a regularly scheduled basis in some states. Others could arise unpredictably, such as when a utility requests pre-approval for a grid modernization investment or a new energy storage project.

Regardless of the option selected, regulators should anticipate there will be significant challenges to overcome. Two are covered here.

In some states, legislation might have to be changed to allow for true consistency. For example, Illinois law specifies that the cost-effectiveness of efficiency programs shall be determined using what it calls a total resource cost test (though its statutory definition is more expansive than in other jurisdictions that nominally use this type of test).

The law doesn't specify any particular cost test for other resource types, so Illinois regulators could apply the total resource cost test consistently to the evaluation of all resources. But if they determined that some other test would best satisfy the NSPM principles, they couldn't use it for efficiency without a change in state law. Lawmaking takes time, and regulators cannot control or dictate the outcome of any request for new legislation.

In most states, prior utility commission rules or orders from multiple dockets may have to be revisited and superseded. For a variety of reasons, it might not be practical to revise multiple rules or policies all at once or with immediate effect. For example, utilities or third-party DER program administrators may be in the midst of implementing programs they designed in accordance with past commission decisions about BCA tests or methods.

Performance incentives or non-compliance penalties that are tied to estimates of net benefits could be at stake. In these cases, commissions shouldn't change the rules in the middle of the game, but can change the rules for future games to promote consistency.

The Benefits of Consistency

Consistent methods and assumptions will lead to improved decisions about allocation of scarce utility resources, resulting in better protection for ratepayers, lower risks for utility shareholders, and better outcomes for society.

We do not minimize the challenge of achieving consistency. It will require significant effort by commissions and the parties that appear before them, and it could take a long time.

Where a comprehensive review of BCA practices is not considered realistic, for whatever reason, progress toward consistency can still be made incrementally. Regulators don't have to achieve consistency comprehensively and all at once, but that should be the goal, and if they keep their eyes on the prize, they can get there eventually.